The arrest and extradition of Venezuelan President Nicolás Maduro generated the kind of information environment that has become typical of major geopolitical events: within 48 hours, Graphika’s intelligence team observed AI-generated videos, fabricated images, and repurposed footage circulating across platforms. This content was shared by both engagement-driven accounts and ideologically motivated users, who may or may not have been aware of the origin of the information they were sharing. "What we're seeing so far is quite typical for high-attention geopolitical events: tactics designed to shape narratives and generate engagement while the ground truth remains fluid," as Graphika CEO Guyte McCord explained in an interview.

With generative AI, the volume of synthetic content that can be produced and disseminated in a short time frame has grown dramatically. Insights published on our Graphika platform highlight three themes in misleading content posted in the days following Maduro's arrest: highly emotional AI-generated videos of Venezuelans celebrating, fabricated images of law enforcement taking Maduro’s mugshot, and repurposed U.S. protest footage presented as a current response to U.S. intervention.

Understanding how this content spreads is critical because the tactics aren't unique to geopolitical events. These behaviors often emerge during brand crises, election cycles, and financial scams. Misleading content posted after Maduro's arrest and extradition serves as a case study in a playbook that applies broadly.

AI-Generated Videos Depicting Venezuelans Celebrating

Shortly after news of Maduro's capture broke, Graphika analysts observed videos circulating on TikTok that appeared to show Venezuelan citizens in the streets, crying in celebration and overwhelmed with emotion. The videos exhibited indicators consistent with AI generation, including glitching hands, distorted flags, and errors in perspective and scale.

We identified multiple TikTok accounts sharing these videos, at least six of which had been active only since December 2025. Before posting the Maduro content, these six accounts had also shared videos of parents and babies that appeared to be AI-generated. This pattern suggests the accounts were not created to push a specific political narrative but rather to capitalize on emotionally captivating topics in an effort to gain followers.

We were able to trace the network of accounts sharing these videos because their English-language captions were identical and shared a distinctive formatting error, with no spaces between sentences:

"The moment of liberation.Venezuelan citizens reacting to the news with overwhelming emotion, marking a potential turning point for the country.#WorldNews #VenezuelaCrisis #Geopolitics#MaduroCaptured."

The content subsequently appeared on other platforms, such as a Facebook page created on January 4, 2026, that shared an identical video with the same caption we saw on TikTok. Authentic accounts on Instagram and politically aligned accounts on Facebook and X shared the same or similar videos, without the original caption, demonstrating how imagery that may have been created solely for engagement can be repurposed for political messaging.

Several TikTok accounts used the same caption when sharing likely AI-generated videos of Venezuelans crying as they celebrated Nicolás Maduro's capture.

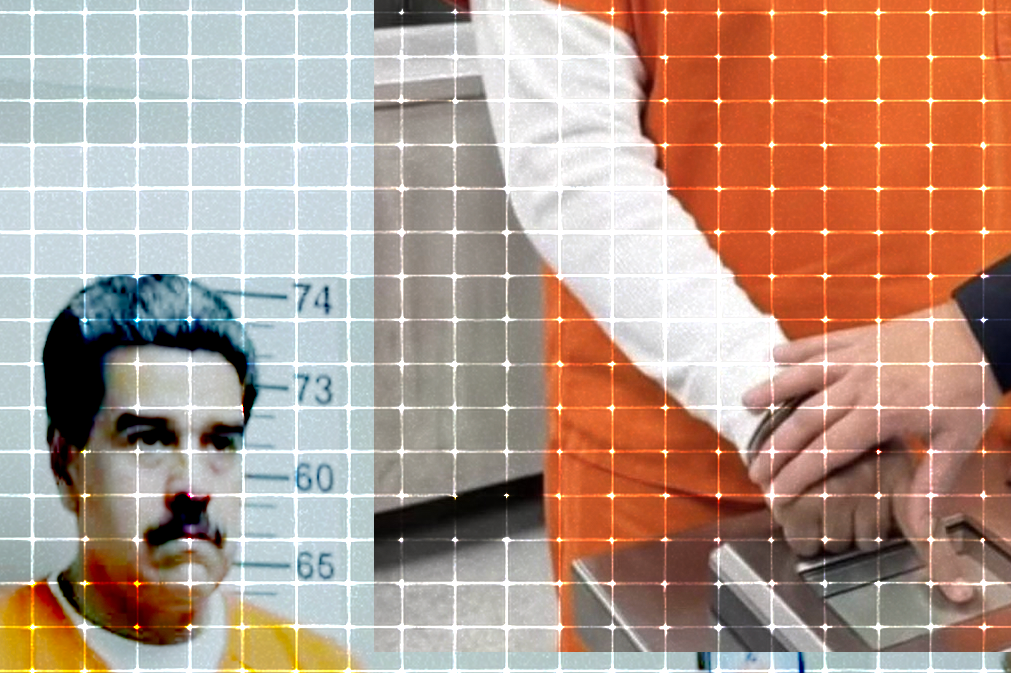

Fabricated Booking Images

Shortly after Maduro’s capture, images began circulating that depicted him in an orange jumpsuit during what appeared to be a law enforcement booking procedure. Graphika assessed that these images were likely AI-generated or digitally manipulated based on multiple inconsistencies: the height charts visible in the images used metric measurements, which are not standard in the U.S., and the numbers on the charts' scales were nonlinear and irregularly spaced.

A Facebook page presenting itself as a news feed posted the likely AI-generated image, which drew reactions including heart and laughing emojis.

Predominantly Spanish-language accounts on Facebook, Instagram, Threads, X, and YouTube were the earliest we found sharing identical or near-identical versions of these images. Some accounts that circulated the images presented themselves as breaking news outlets, using generic branding, headline-style captions, and formatting similar to legitimate news coverage. At least one version of the imagery appeared in an article published by Yahoo! Taiwan, where it was presented as factual coverage, demonstrating how unverified images proliferating on social media can be picked up by news outlets and framed as actual documentation of an event.

Likely AI-generated booking image appearing in Yahoo! Taiwan coverage of the event. The caption under the image translates to: “The New York Times pointed out that when the judge asked Maduro to confirm his identity in court, he clearly called himself "President of the Bolivarian Republic of Venezuela" in Spanish and reiterated that he was illegally detained. Picture: Reprinted from X account @FactNews890604”

Opponents of Maduro used these images to spread their messages, framing them as evidence of justice and accountability. One X post that shared the images while referencing regional political figures, including Mexican President Claudia Sheinbaum, received 1.4 million views.

As shown in our report Cheap Tricks: How AI Slop is Powering Influence Campaigns, AI tools can help create inauthentic content at scale. The speed of production and the ability to tap into a popular topic and coalesce around a narrative matter more than the content's quality or the accuracy of its details. People will engage with AI-generated content that supports their point of view; it doesn't have to be hyperrealistic. In the booking image example, the fabrication was detectable without sophisticated tools, yet the content was compelling enough to be shared by people expressing their political sentiments. Seemingly because of its proliferation on social media, it was then cited as actual documentation by a legitimate news outlet, despite telltale signs of AI glitches.

Repurposed Protest Footage

Not all misleading imagery is generated by AI. Repurposing media from other contexts is another narrative strategy commonly employed during politically charged or rapidly evolving events, when information is constantly changing.

A cluster of ideologically aligned X accounts located in Yemen, including an account belonging to a member of the Yemeni Zaydi Shia Islamist movement, posted aerial footage of large crowds in multiple U.S. cities. The accompanying captions claimed the footage depicted Americans protesting against Maduro's capture and U.S. intervention in Venezuela. The footage was authentic, but it originated from the "No Kings" marches held in multiple U.S. cities on October 18, 2025, not in January 2026.

Screenshot of "No Kings" protest footage posted to TikTok by Colombian politician Rafael Navarro, with his own campaign logo covering the "No Kings" logo in the upper-left corner.

Some posts retained the original "No Kings" logo in the upper-left corner of the footage. Others used a version in which the logo had been obscured. Graphika traced this variant to Colombian Senate candidate Rafael Navarro, who posted the footage to TikTok on January 5 with his campaign logo covering the original branding. Navarro's post included no caption or contextual information. Pro-Maduro Spanish- and English-language accounts subsequently repurposed Navarro's version with captions framing it as anti-intervention protest footage.

Example of an Arabic-language post misrepresenting "No Kings" footage as depicting U.S. opposition to Maduro's capture. Headline reads: “Breaking | Massive U.S. Protests Against Trump's Military Intervention in Venezuela 🇺🇸✊”

Additional Arabic-language X accounts amplified a TikTok post by pro-Palestine content creator Majdi Shomali, adding captions such as "America in chaos protesting Trump's policy" without clarifying that the footage predated Maduro's arrest.

Unlike the engagement-driven TikTok accounts that posted the emotional videos in the first example, the accounts spreading this repurposed footage appeared to be ideologically motivated, using the material to support a narrative opposing U.S. foreign policy and to present misleading evidence that U.S. citizens share this opposition to U.S. actions in Venezuela.

Why This Matters

These three cases illustrate how accessible generative AI tools, platform incentives for engagement, and a fast-moving news environment combine to create conditions in which misleading content spreads quickly, with or without centralized coordination. The proliferation of this content can solidify shared sentiments within an ideologically aligned community, but it can also blur the line between fact and fiction as it works its way into mainstream news stories and is presented as verified documentation.

High-profile geopolitical events, such as the Maduro arrest, offer a window into how these dynamics play out in real time. However, the same tactics are employed across many other contexts, including brand crises, financial scams involving deepfakes of well-known investors, and foreign influence campaigns.

Graphika tracks emerging narratives, synthetic media, and coordinated activity across social media platforms in real time. To learn how our tools and analysts can help your team monitor the information environment around events that matter to you, book a demo.