How financial analysts can cut through the noise and act on what matters most

In 2025, fraud on social media has evolved into a fast-moving, cross-platform threat that’s harder to detect, harder to categorize, and harder to stop. Scammers are combining old-school phishing techniques with new-school tech — from AI-generated deepfakes to hybrid physical-digital tactics — to bypass detection and scale deception.

For financial institutions and fraud teams, the challenge isn’t just spotting these scams. It’s organizing and prioritizing them. At Graphika, we use our ATLAS platform to uncover and categorize social media-enabled fraud at scale. We track tactics, surface key indicators of compromise (IOCs), and help analysts detect coordinated activity even while it is still niche and has not yet gone mainstream.

Here are some of the top scam trends reported in 2025 — and what they mean for analysts monitoring today’s threat landscape.

1. AI-Enabled Impersonation and Deepfake Scams

One of the most dangerous developments in 2025 is the rise of AI-generated impersonation fraud. These scams use synthetic video and audio to exploit the credibility of trusted financial figures, producing highly convincing messages that often target investors.

- Ripple & Strategy Executive Deepfakes: Scammers circulated fake livestreams featuring Brad Garlinghouse, the CEO of blockchain-payments company Ripple, and Michael Saylor, the executive chairman of business intelligence firm Strategy, urging users to send crypto in return for “double rewards.” These deepfakes mimicked the tone, language, and visual style of real public appearances and were timed to coincide with major crypto events such as the Bitcoin 2025 conference.

At least three X accounts that appear to impersonate Ripple or its CEO, Brad Garlinghouse, shared the same clip of Garlinghouse, which used very likely AI-generated audio to portray him promoting a fraudulent giveaway.

- Forex Influencer Impersonation: Deepfake videos of financial analyst Kathy Lien promoted a fraudulent trading bot that claimed returns of up to 1,200%. The scam used YouTube and Facebook posts to target new retail investors.

- Fabricated Coaches & Testimonials: Fake trading “experts” on Facebook used stolen images, fake reviews, and impersonation of well-known investors to lure users into schemes.

➡ Why It Matters: These scams often look legitimate and can spread quickly. Keyword alerts can’t detect a deepfake or a cloned profile. Coordinated indicators and narrative mapping can, for example by spotting the same clip pushed by several impersonator accounts at once.
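To make “coordinated indicators” more concrete: the Garlinghouse case involved several impersonator accounts posting the same clip. The sketch below is a minimal illustration, not a description of how ATLAS works. It assumes posts have already been collected as account/media pairs (the `Post` type, the `coordinated_media` helper, the sample accounts, and the three-account threshold are all hypothetical) and flags any media file shared by several distinct accounts.

```python
import hashlib
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Post:
    """Hypothetical record: the posting account and the raw media bytes."""
    account: str
    media: bytes


def coordinated_media(posts: list[Post], min_accounts: int = 3) -> dict[str, set[str]]:
    """Map each media SHA-256 digest to the accounts that posted it,
    keeping only media shared by at least `min_accounts` distinct accounts."""
    accounts_by_digest: defaultdict[str, set[str]] = defaultdict(set)
    for post in posts:
        # Exact hashing only matches byte-identical files.
        digest = hashlib.sha256(post.media).hexdigest()
        accounts_by_digest[digest].add(post.account)
    return {
        digest: accounts
        for digest, accounts in accounts_by_digest.items()
        if len(accounts) >= min_accounts
    }


# Invented sample: three accounts reuse one clip, a fourth posts something else.
posts = [
    Post("acct_a", b"clip-1"),
    Post("acct_b", b"clip-1"),
    Post("acct_c", b"clip-1"),
    Post("acct_d", b"clip-2"),
]
for digest, accounts in coordinated_media(posts).items():
    print(digest[:12], sorted(accounts))
```

Exact hashing keeps the sketch simple but misses re-encoded, cropped, or watermarked copies; a perceptual hash or matching on audio transcripts would catch more variants of the same deepfake.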

2. Platform Impersonation and Phishing on Hard-to-Track Apps

Scammers are creating fraudulent versions of legitimate financial services, especially on messaging platforms like Telegram and WhatsApp, which are difficult to monitor.

- Telegram Clone of Decentralized Finance Platform: A Telegram group mimicked the branding and messaging of cryptocurrency wallet manager Pawtato Finance, directing users to phishing links under the guise of an ambassador program. The fake group grew to over 9,000 members.

- Silent Hill Instagram Ad Scam: A phishing campaign targeted gamers with an Instagram ad for a closed beta test of a new Silent Hill video game. Mobile users were sent to a spoofed Steam login page, while desktop visitors were redirected to the real site. This device-based cloaking helped the scam avoid moderation, likely because anyone checking the link from a desktop saw only the legitimate page.

Image of the Instagram ad targeting Silent Hill fans, as posted by a Reddit user. The link on the ad directed users to a page that harvested their Steam credentials.

- Facebook Deepfake Ad Targeting Finance Followers: An inauthentic Facebook page impersonating finance expert Martin Lewis used Meta ads to promote a deepfake video of Lewis falsely claiming to run a WhatsApp group that shares stock tips and market insights. The video received over 1 million views, with ad call-to-action buttons directing users to WhatsApp. The page rebranded as “Money Saving Expert,” borrowing the name of Lewis’s legitimate consumer advice platform.
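The Silent Hill campaign’s device-based redirect can be probed by requesting the same link as a phone and as a desktop browser, then comparing where each request lands. Below is a minimal sketch using only Python’s standard library. The user-agent strings, the `final_url` and `looks_cloaked` helpers, and the example URLs are assumptions for illustration. It only observes server-side HTTP redirects, not JavaScript or fingerprint-based cloaking.

```python
import urllib.request
from typing import Callable
from urllib.parse import urlsplit

# Example User-Agent strings (assumptions; any realistic phone/desktop pair works).
MOBILE_UA = (
    "Mozilla/5.0 (iPhone; CPU iPhone OS 17_0 like Mac OS X) "
    "AppleWebKit/605.1.15 (KHTML, like Gecko) Mobile/15E148"
)
DESKTOP_UA = (
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) "
    "AppleWebKit/537.36 (KHTML, like Gecko) Chrome/124.0 Safari/537.36"
)


def final_url(url: str, user_agent: str, timeout: float = 10.0) -> str:
    """Request `url` with the given User-Agent and return the URL reached
    after standard HTTP redirects, which urlopen follows by default.
    JavaScript and meta-refresh redirects are not followed."""
    request = urllib.request.Request(url, headers={"User-Agent": user_agent})
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.url


def looks_cloaked(url: str, resolve: Callable[[str, str], str] = final_url) -> bool:
    """Flag a link whose phone and desktop visitors end up on different hosts."""
    mobile_host = urlsplit(resolve(url, MOBILE_UA)).hostname
    desktop_host = urlsplit(resolve(url, DESKTOP_UA)).hostname
    return mobile_host != desktop_host


# Offline demo with a stubbed resolver that mimics the Silent Hill pattern.
def fake_resolve(url: str, user_agent: str) -> str:
    if "iPhone" in user_agent:
        return "https://steam-login.example/signin"
    return "https://store.steampowered.com/"


print(looks_cloaked("https://ad-link.example/beta", fake_resolve))  # True
```

A host mismatch is a triage signal rather than proof: legitimate sites also serve separate mobile subdomains, so flagged links still need human review.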

➡ Why It Matters: These tactics evade traditional takedown methods and rely on trust in familiar platforms. Financial fraud teams need visibility into non-standard delivery channels, such as paid social media ads and messaging apps.

3. Exploitation of Financial Institutions and Government Brands

Scammers continue to weaponize trust in banks and government agencies. These scams often target consumers directly, using urgency and fear to prompt risky behavior.

- Chase/Zelle Fraud Calls: Scammers impersonate Chase bank employees, spoof caller IDs, and guide users through deceptive "fraud recovery" steps that result in money being sent via Zelle. Victims are even given follow-up “security” instructions to reinforce trust.

- USAA Impersonation: Fake alerts about “account locks” are delivered via SMS or phone, directing victims to phishing pages or bogus hotlines to steal credentials.

- IRS Stimulus Scam: Capitalizing on the IRS’s March statement about remaining pandemic payments, scammers sent text and Facebook messages urging users to claim a $1,400 credit through a spoofed IRS site. The page harvested Social Security numbers and banking data.

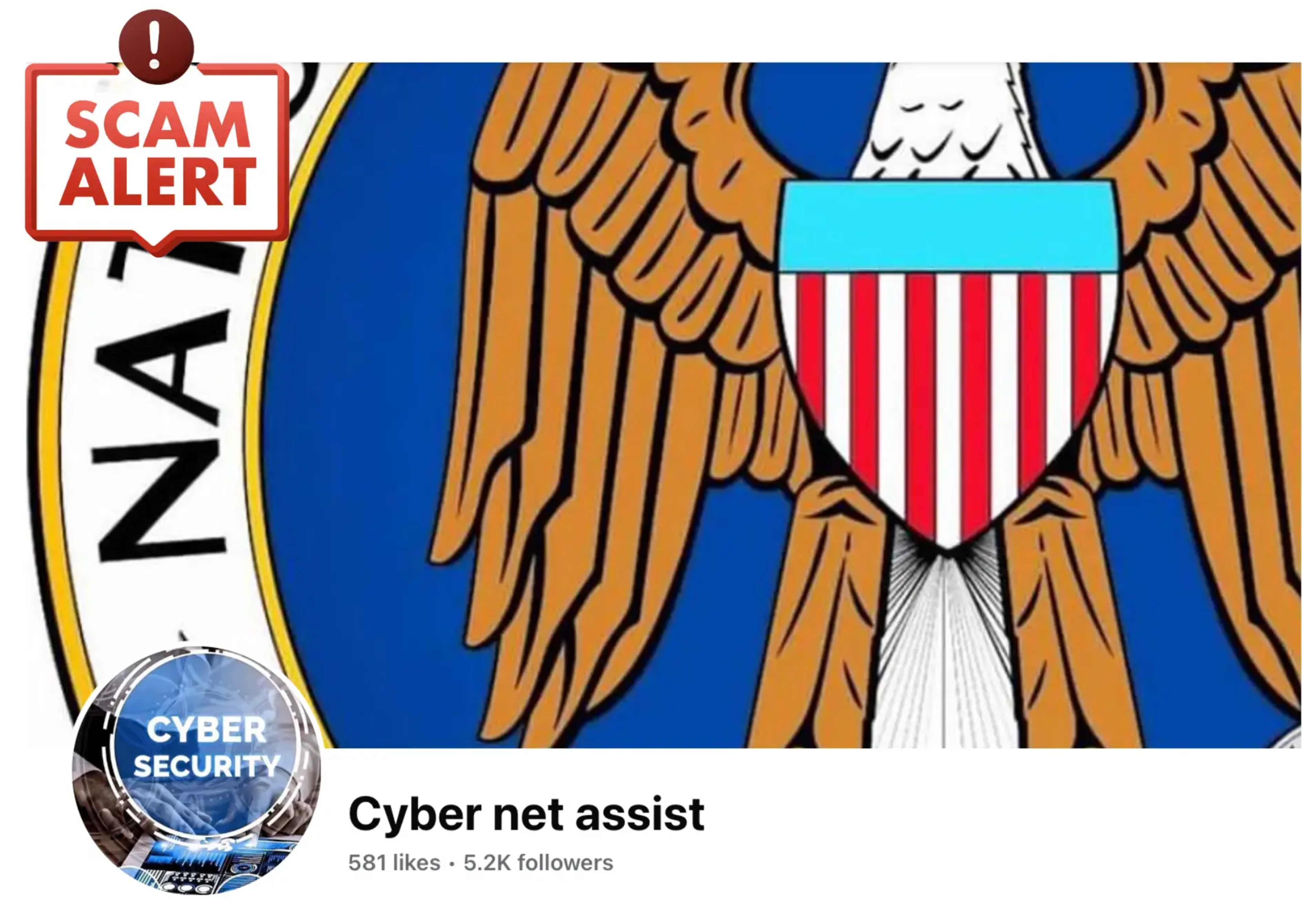

A reply to a Facebook user’s post about a U.S. tax scam contained a hashtag leading to the account of a purported professional cybersecurity service.
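Spoofed sites like the fake IRS page in the stimulus scam often reuse the brand name inside an unrelated domain. One simple rule, sketched here as an assumption rather than a complete lookalike detector, flags links whose hostname contains the brand as a separate label or hyphenated token but does not belong to the official domain. The helper names and sample URLs are hypothetical; `irs.gov` is the IRS’s real domain.

```python
import re
from urllib.parse import urlsplit


def host_of(url: str) -> str:
    """Lowercased hostname without a trailing dot ('' if the URL has none)."""
    return (urlsplit(url).hostname or "").rstrip(".")


def is_official(url: str, official_domain: str = "irs.gov") -> bool:
    """True if the URL's host is the official domain or one of its subdomains."""
    host = host_of(url)
    return host == official_domain or host.endswith("." + official_domain)


def brand_lookalike(url: str, brand: str = "irs", official_domain: str = "irs.gov") -> bool:
    """Flag hosts that use the brand as a whole label or hyphenated token
    but are not part of the official domain."""
    tokens = re.split(r"[.\-]", host_of(url))
    return brand in tokens and not is_official(url, official_domain)


for link in [
    "https://www.irs.gov/payments",
    "https://irs.gov.claim-credit.example/form",
    "https://irs-stimulus.example/",
    "https://firstbank.example/",
]:
    print(link, brand_lookalike(link))
```

The loop flags only the second and third links. Token matching avoids false hits such as `firstbank.example` (which happens to contain the letters “irs”), but it misses concatenated names like `irsrefund.example` and homoglyph tricks, so it complements rather than replaces broader lookalike-domain monitoring.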

➡ Why It Matters: These scams operate at scale and often exploit real-world timing. Analysts need contextual intelligence to track which narratives are gaining momentum and where they will likely break next.

4. Paid Ads and Virality as Attack Vectors

Scammers are paying for ads to reach victims and then using social networks to amplify their messages, often in communities where victims are already vulnerable.

- Counterfeit Currency Ads: Paid Facebook and Threads ads promoted the sale of fake Indian rupees using visual evasion techniques like handwritten notes and overlaid contact details. Users were directed to Telegram for further steps.

An Instagram post advertising counterfeit Indian rupees, overlaying contact details on the image in a likely effort to avoid content moderation.

- Romance Scams with Celebrity Personas: Scammers impersonated celebrities such as Keanu Reeves and created fake military or humanitarian profiles to initiate long-term emotional cons, often moving victims from Facebook to WhatsApp or Telegram.

- Tax Season ‘Fund Recovery’ Hoaxes: Fraudsters posed as cybersecurity experts or past victims in tax fraud discussions, offering to “help recover stolen funds” — only to defraud users again.

➡ Why It Matters: Paid ads can give scammers legitimacy. Once they gain traction, narratives spread organically through comments, hashtags, and shared content. Teams need to monitor tactics, techniques, and procedures (TTPs), not just static search terms.

What Financial Intelligence Teams Need

If you rely on keyword monitoring, manual research, or fragmented alerts from takedown vendors, you’re likely overwhelmed and underinformed.

Common challenges we hear from financial teams:

- Alert fatigue: Too many alerts, with no prioritization or categorization

- Manual workflows: Time-consuming tracking across platforms and private groups

- Leadership pressure: Need to brief leadership quickly on both direct and industry-wide threats (e.g., “What happened to Bank XX?”)

- Lack of tactical insights: Desire for IOCs, TTPs, and evidence-based intelligence to shape internal rules and detection logic
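On that last point, a first pass at turning raw posts into IOCs can be as simple as pulling out the contact points scammers push victims toward; several campaigns described above moved victims to Telegram or WhatsApp. The regular expressions below are simplified assumptions (for example, they ignore private `t.me/+` invite links and phone numbers written without a country code), and `extract_iocs` and the sample post are hypothetical.

```python
import re

# Simplified patterns (assumptions, not a complete IOC grammar).
URL_RE = re.compile(r"https?://[^\s<>\"']+", re.IGNORECASE)
# Public Telegram usernames: 5-32 letters, digits, or underscores.
TELEGRAM_RE = re.compile(r"\b(?:t|telegram)\.me/([A-Za-z0-9_]{5,32})", re.IGNORECASE)
# WhatsApp click-to-chat links carry the number in international format.
WHATSAPP_RE = re.compile(r"\bwa\.me/(\d{7,15})", re.IGNORECASE)
# Phone numbers written with a leading "+" country code.
PHONE_RE = re.compile(r"\+\d[\d\s\-]{7,16}\d")


def extract_iocs(text: str) -> dict[str, list[str]]:
    """Pull deduplicated, sorted contact-point IOCs out of a post's text."""
    return {
        # Trim punctuation that often trails a URL at the end of a sentence.
        "urls": sorted({u.rstrip(".,;)") for u in URL_RE.findall(text)}),
        "telegram": sorted({name.lower() for name in TELEGRAM_RE.findall(text)}),
        "whatsapp": sorted(set(WHATSAPP_RE.findall(text))),
        # Normalize phone numbers by dropping spaces and hyphens.
        "phones": sorted({re.sub(r"[\s\-]", "", p) for p in PHONE_RE.findall(text)}),
    }


# Invented sample post, loosely modeled on the counterfeit-currency ads above.
sample = "Notes available! DM t.me/fake_notes_shop or call +1 555 010 0199. Details: https://example.com/offer."
print(extract_iocs(sample))
```

Text extraction alone will miss contact details overlaid on images, as in the counterfeit rupee ads; those would need an OCR step before these patterns apply.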

How Graphika Helps

Graphika’s ATLAS platform is built for intelligence teams dealing with real-time, cross-platform manipulation threats. We help you:

✅ Detect and monitor scams before they gain traction

✅ Track campaigns across network-centric and open-source platforms

✅ Understand narrative exposure and amplification trends

✅ Surface signals and TTPs that inform prevention and response

✅ Gain situational awareness beyond your brand — across your sector

🔍 Ready to cut through the noise?

See how Graphika supports threat intelligence, fraud, and trust & safety teams in financial services and beyond.

Book a demo to explore live campaign tracking, threat categorization, and actionable intelligence you can brief on immediately.